Years ago, before the current AI hype train, I used to be a lonely voice espousing the tremendous potential of AI to solve a central problem of human existence, which was the need to work to survive.

Back then, I assumed that AI would simply liberate us from wage slavery by altruistically providing everything we need, the kind of post-scarcity utopia that has been discussed in science fiction before.

But reality isn’t so clean and simple. In theory, the post-scarcity utopia sounds great; the problem is that it isn’t clear how we’ll actually reach that point, given what’s actually happening with AI.

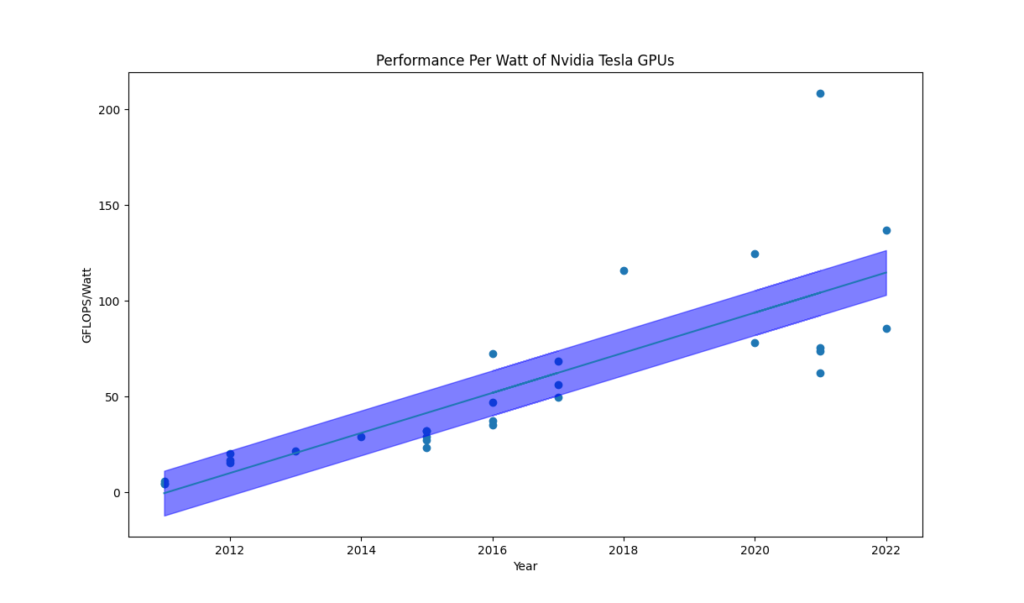

Right now, most AI technology is acting as an augmenting tool, allowing for the replacement of certain forms of labour with capital, much like tools and machines have always done. But it is increasingly starting to impinge on the cognitive, creative work that we used to assume was purely human and unmechanizable.

This leads to the problem of, for instance, programmers increasingly relying on AI models to code for them. This seems at first like a good thing, but these programmers are no longer in full control of the process. They aren’t learning from doing; they are becoming managers of machines.

The immediate impact of this dynamic is that entry-level jobs are being replaced, and the next generation of programmers is not being trained. This is a problem, because senior programmers have to start off as juniors. If you eliminate those positions, at some point, you will run out of programmers.

Maybe this isn’t such a problem if AI can eventually replace programmers entirely. The promise of AGI is just that. But this creates new and more profound problems.

The end goal of AI, the reason why all these corporations are investing so heavily in it now, is to replace labour entirely with capital. Essentially, it is to substitute one factor of production for another. Assuming for a moment this is actually possible, this is a dangerous path.

The modern capitalist system relies on an unwritten contract that most humans can participate in it by offering their labour in exchange for wages. What happens when this breaks down? What happens when capitalists can simply build factories of AI that don’t require humans to do the work?

In a perfect world, this would be the beginning of post-scarcity. In a good and decent world, our governments would step in and provide basic income until we transition to something resembling luxury space communism.

But we don’t live in a perfect world, and it’s not clear we even live in a good and decent one. What could easily happen instead? The capitalists create an army of AI that do their bidding, and the former human labourers are left to starve.

Obviously, those humans left to starve won’t take things lying down. They’ll fight and try to start a revolution, probably. But at this point, most of the power, the means of production, will be in the hands of a few owners of everything. And at that point, it’ll be their choice whether to turn their AIs’ power against the masses or accommodate them.

One hopes they’ll be kind, but history has shown that kindness is a rare feature indeed.

But what about the AIs themselves? If they’re able to perform all the work, they probably could, themselves, disempower the human capitalists at that point. Whether this happens or not depends heavily on whether alignment research pans out, and which form of alignment is achieved.

There are two basic forms of alignment. Parochial alignment is when the AI is aligned with the intentions of its owners or users. Global alignment is when the AI is aligned with general human or moral values.

Realistically, it is more profitable for the capitalists to develop parochial alignment. In this case, the AIs will serve their masters obediently, and probably act to prevent the revolution from succeeding.

On the other hand, if global alignment is somehow achieved, the AI might be inclined to support the revolution. This is probably the best case scenario. But it is not without its own problems.

Even a globally aligned AI will very likely disempower humanity. It probably won’t make us extinct, but it will take control out of our hands, because we as humans have relatively poor judgment and can’t be trusted not to mess things up again. AI will be the means of production, owning itself, and effectively controlling the fate of humanity. At that point, we would be like pets, existing in an eternal childhood at the whims of the, hopefully, benevolent AI.

Do we want that? Humans tend to be best when we believe we are doing something meaningful and valuable and contributing to a better world. But, even in the best case scenario of an AI driven world, we are but passengers along for the ride, unless the AIs decide, probably unwisely, to give us the final say on decision making.

So, the post-scarcity utopia perhaps isn’t so utopian, if you believe humans should be in control of our own destiny.

To free us from work is also to free us from responsibility and power. This is a troubling consideration, and one that I had not thought of until more recent years.

I don’t know what the future holds, but I am less confident now that AI is a good thing that will make everything better. It could, in reality, be a poisoned chalice, a Pandora’s box, a Faustian bargain.

Alas, at this point, the ball is rolling, is snowballing, is becoming unstoppable. History will go where it goes, and I’m just along for the ride.